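This article explains the code paths behind ClickHouse S3 Engine read and write performance, and the tuning that improved them by orders of magnitude.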

How to tune ClickHouse performance

I Preface

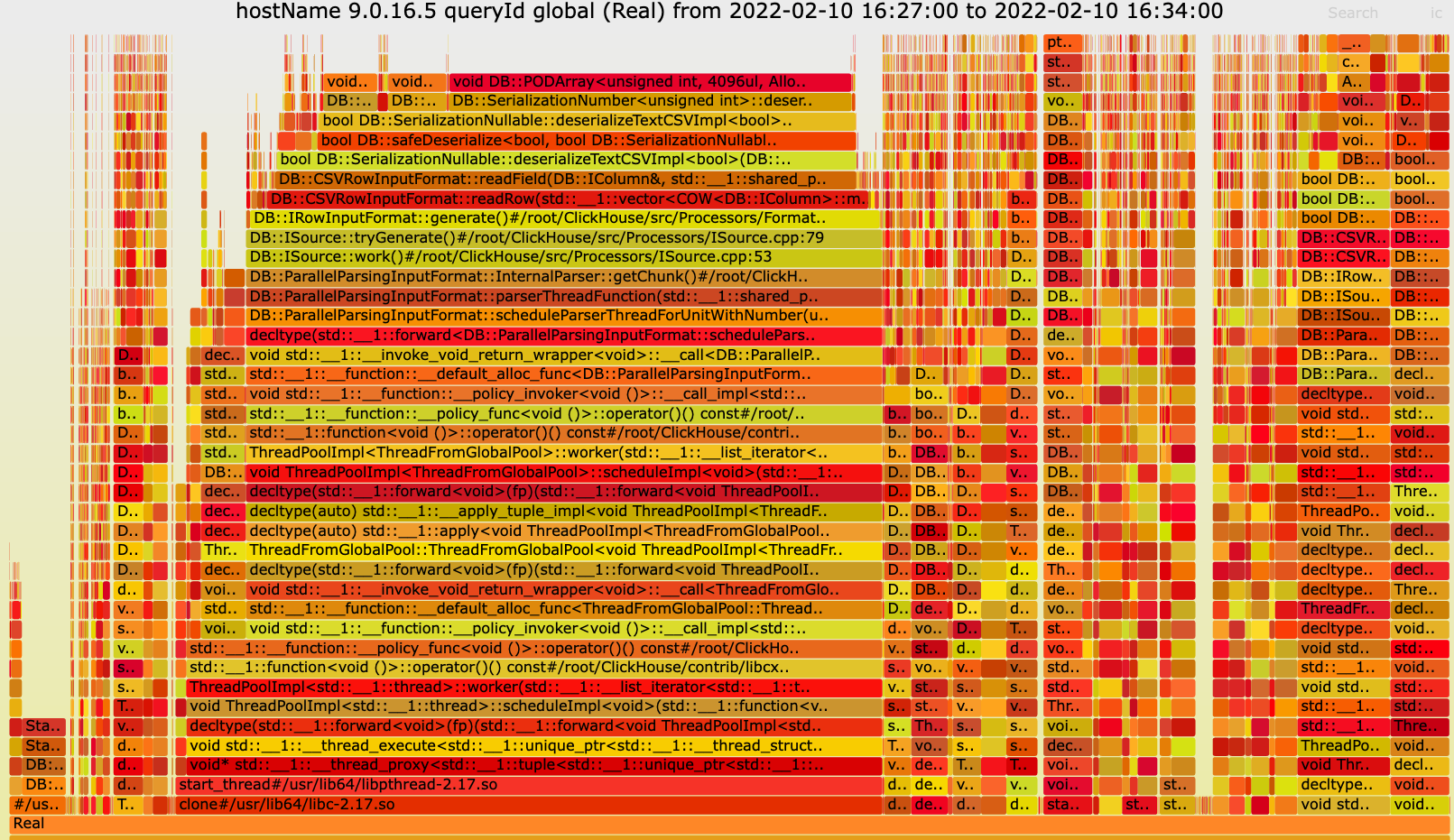

II Tuning with perf

1 Stack source: trace_log

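```sql
-- addressToSymbol, addressToLine and demangle are introspection functions and must be enabled first
SET allow_introspection_functions = 1;

SELECT
    count(),
    arrayStringConcat(arrayMap(x -> concat(demangle(addressToSymbol(x)), '\n ', addressToLine(x)), trace), '\n') AS sym
FROM system.trace_log
WHERE query_id = '157d5b6d-fa06-4bed-94f3-01f1d5f04e24'
GROUP BY trace
ORDER BY count() DESC
LIMIT 10
```

2 ClickHouse threads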

```shell
[root@9 ~]# ps H -o 'tid comm' $(pidof -s clickhouse-server) | awk '{print $2}' | sort | uniq -c | sort -rn
    230 ChunkParser
    199 QueryPipelineEx
     71 Segmentator
     66 BgSchPool
      9 clickhouse-serv
      3 TCPHandler
      3 SystemLogFlush
      3 Formatter
      2 ExterLdrReload
      2 ConfigReloader
      2 Collector
```

3 Anomalous stack

This stack is hit many times:

```text
/root/ClickHouse/src/IO/BufferBase.h:82
void DB::readIntTextImpl<unsigned int, void, (DB::ReadIntTextCheckOverflow)0>(unsigned int&, DB::ReadBuffer&)
/root/ClickHouse/src/IO/BufferBase.h:95
void DB::readIntText<(DB::ReadIntTextCheckOverflow)0, unsigned int>(unsigned int&, DB::ReadBuffer&)
/root/ClickHouse/src/IO/ReadHelpers.h:393
std::__1::enable_if<is_integer_v<unsigned int>, void>::type DB::readText<unsigned int>(unsigned int&, DB::ReadBuffer&)
/root/ClickHouse/src/IO/ReadHelpers.h:902
void DB::readCSVSimple<unsigned int>(unsigned int&, DB::ReadBuffer&)
/root/ClickHouse/src/IO/ReadHelpers.h:988
std::__1::enable_if<is_arithmetic_v<unsigned int>, void>::type DB::readCSV<unsigned int>(unsigned int&, DB::ReadBuffer&)
/root/ClickHouse/src/IO/ReadHelpers.h:994
DB::SerializationNumber<unsigned int>::deserializeTextCSV(DB::IColumn&, DB::ReadBuffer&, DB::FormatSettings const&) const
/root/ClickHouse/src/DataTypes/Serializations/SerializationNumber.cpp:99
bool DB::SerializationNullable::deserializeTextCSVImpl<bool>(DB::IColumn&, DB::ReadBuffer&, DB::FormatSettings const&, std::__1::shared_ptr<DB::ISerialization const> const&)::'lambda'(DB::IColumn&)::operator()(DB::IColumn&) const
/root/ClickHouse/src/DataTypes/Serializations/SerializationNullable.cpp:377
bool DB::safeDeserialize<bool, bool DB::SerializationNullable::deserializeTextCSVImpl<bool>(DB::IColumn&, DB::ReadBuffer&, DB::FormatSettings const&, std::__1::shared_ptr<DB::ISerialization const> const&)::'lambda'()&, bool DB::SerializationNullable::deserializeTextCSVImpl<bool>(DB::IColumn&, DB::ReadBuffer&, DB::FormatSettings const&, std::__1::shared_ptr<DB::ISerialization const> const&)::'lambda'(DB::IColumn&)&, (bool*)0>(DB::IColumn&, DB::ISerialization const&, bool DB::SerializationNullable::deserializeTextCSVImpl<bool>(DB::IColumn&, DB::ReadBuffer&, DB::FormatSettings const&, std::__1::shared_ptr<DB::ISerialization const> const&)::'lambda'(DB::IColumn&)&, (bool*)0&&)
/root/ClickHouse/src/DataTypes/Serializations/SerializationNullable.cpp:193
bool DB::SerializationNullable::deserializeTextCSVImpl<bool>(DB::IColumn&, DB::ReadBuffer&, DB::FormatSettings const&, std::__1::shared_ptr<DB::ISerialization const> const&)
/root/ClickHouse/src/DataTypes/Serializations/SerializationNullable.cpp:409
DB::CSVRowInputFormat::readField(DB::IColumn&, std::__1::shared_ptr<DB::IDataType const> const&, std::__1::shared_ptr<DB::ISerialization const> const&, bool)
/root/ClickHouse/src/Processors/Formats/Impl/CSVRowInputFormat.cpp:404
DB::CSVRowInputFormat::readRow(std::__1::vector<COW<DB::IColumn>::mutable_ptr<DB::IColumn>, std::__1::allocator<COW<DB::IColumn>::mutable_ptr<DB::IColumn> > >&, DB::RowReadExtension&)
/root/ClickHouse/src/Processors/Formats/Impl/CSVRowInputFormat.cpp:236
DB::IRowInputFormat::generate()
```

4 Performance analysis
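The hot path in the stack above is per-field text parsing: CSVRowInputFormat::readRow calls readField for every column, which goes through the Nullable wrapper down to readIntTextImpl for each UInt32 value. As a rough illustration of that per-field cost (a hypothetical sketch, not ClickHouse's code), the helpers below parse one row of unsigned integers one field at a time. The `(DB::ReadIntTextCheckOverflow)0` template argument in the frames appears to select the variant without overflow checking, so the sketch skips that check as well; `parseUInt32` and `parseUIntRow` are names made up for this example.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string_view>
#include <vector>

// Hypothetical helper: parse an unsigned decimal number starting at `pos` and
// advance `pos` past its digits. Like the unchecked variant, it does not detect
// overflow (unsigned arithmetic simply wraps around).
inline std::uint32_t parseUInt32(std::string_view text, std::size_t & pos)
{
    std::uint32_t value = 0;
    while (pos < text.size() && text[pos] >= '0' && text[pos] <= '9')
    {
        value = value * 10 + static_cast<std::uint32_t>(text[pos] - '0');
        ++pos;
    }
    return value;
}

// Parse one CSV row of unsigned integers separated by ','.
// Every field is a separate call, so total cost scales with rows * columns.
inline std::vector<std::uint32_t> parseUIntRow(std::string_view row)
{
    std::vector<std::uint32_t> fields;
    std::size_t pos = 0;
    for (;;)
    {
        fields.push_back(parseUInt32(row, pos));
        if (pos >= row.size() || row[pos] != ',')
            break;
        ++pos;  // skip the delimiter
    }
    return fields;
}
```

With 17 columns per lineorder row and tens of thousands of rows per chunk, even a cheap per-field routine like this runs millions of times per segment. That is why its frames dominate the sampled stacks, and why any extra abstraction around it (as in a Debug build) is so costly.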

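III Optimization

1 Stack call analysis

2 UML class relationships and call order

- Optimization plan: improve the single-threaded read performance of ReadFromS3

3 Call stack

- ParallelParsingInputFormat.h

- ReadBuffer parsing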
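The many `Segmentator` and `ChunkParser` threads in the thread listing appear to come from ParallelParsingInputFormat, which cuts the input into segments and parses them on several threads. The following is a much-simplified sketch of that segment-then-parse-in-parallel pattern under stated assumptions: rows end with '\n' and contain no quoted newlines, counting rows stands in for real parsing, and `splitIntoSegments` / `countRowsParallel` are hypothetical names. The real class coordinates a dedicated segmentator thread with a pool of parser threads rather than using `std::async`.

```cpp
#include <cassert>
#include <cstddef>
#include <future>
#include <string_view>
#include <vector>

// Cut `data` into segments of at least `min_chunk_bytes` bytes, each ending on '\n',
// so no row is split between two segments (assumes no quoted newlines).
inline std::vector<std::string_view> splitIntoSegments(std::string_view data, std::size_t min_chunk_bytes)
{
    if (min_chunk_bytes == 0)
        min_chunk_bytes = 1;
    std::vector<std::string_view> segments;
    std::size_t begin = 0;
    while (begin < data.size())
    {
        std::size_t end = begin + min_chunk_bytes;
        if (end >= data.size())
        {
            end = data.size();
        }
        else
        {
            // Extend the segment to the end of the row that crosses the size threshold.
            std::size_t newline = data.find('\n', end - 1);
            end = (newline == std::string_view::npos) ? data.size() : newline + 1;
        }
        segments.push_back(data.substr(begin, end - begin));
        begin = end;
    }
    return segments;
}

// Count rows in one segment; a stand-in for the real per-chunk parser.
inline std::size_t countRows(std::string_view segment)
{
    std::size_t rows = 0;
    for (char c : segment)
        if (c == '\n')
            ++rows;
    if (!segment.empty() && segment.back() != '\n')
        ++rows;  // last row without a trailing newline
    return rows;
}

// Parse all segments concurrently, but collect the results in segment order
// so that the output preserves the input row order.
inline std::vector<std::size_t> countRowsParallel(std::string_view data, std::size_t min_chunk_bytes)
{
    std::vector<std::future<std::size_t>> pending;
    for (std::string_view segment : splitIntoSegments(data, min_chunk_bytes))
        pending.push_back(std::async(std::launch::async, countRows, segment));

    std::vector<std::size_t> rows_per_segment;
    for (auto & result : pending)
        rows_per_segment.push_back(result.get());
    return rows_per_segment;
}
```

For example, `countRowsParallel("a\nb\nc\nd\ne\n", 4)` yields three segments with 2, 2 and 1 rows. The design point is that segmentation is sequential and cheap (it only looks for row boundaries), while the expensive parsing runs in parallel. This is also why a slow single-threaded reader in front of the segmentator caps the whole pipeline.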

IV FileSegmentationEngine

1 FileSegmentationEngine lambda

```cpp
using FileSegmentationEngine = std::function<std::pair<bool, size_t>(
    ReadBuffer & buf,
    DB::Memory<Allocator<false>> & memory,
    size_t min_chunk_bytes)>;
```

2 ParallelParsingInputFormat.h

```cpp
/// Function to segment the file. Then "parsers" will parse that segments.
FormatFactory::FileSegmentationEngine file_segmentation_engine;
```

3 Registration

```cpp
void registerFileSegmentationEngineCSV(FormatFactory & factory)
{
    auto register_func = [&](const String & format_name, bool with_names, bool with_types)
    {
        // At least one data row, plus the header rows for the WithNames / WithNamesAndTypes variants
        size_t min_rows = 1 + int(with_names) + int(with_types);
        factory.registerFileSegmentationEngine(format_name, [min_rows](ReadBuffer & in, DB::Memory<> & memory, size_t min_chunk_size)
        {
            return fileSegmentationEngineCSVImpl(in, memory, min_chunk_size, min_rows);
        });
    };

    registerWithNamesAndTypes("CSV", register_func);
}
```
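The registered lambda only forwards to fileSegmentationEngineCSVImpl with min_rows set to 1, 2 or 3. The cut rule that function applies can be illustrated with a standalone sketch over an in-memory string: scan for '\n' outside double quotes, and stop at the first row end once at least `min_chunk_size` bytes precede it and at least `min_rows` rows have been seen. `findSegmentEnd` is a hypothetical helper, not ClickHouse code. Unlike the real engine, it ignores '\r' line endings and does not refill a ReadBuffer.

```cpp
#include <cassert>
#include <cstddef>
#include <string_view>
#include <utility>

// Returns {end offset of the segment, number of rows in it}.
// A row ends at '\n' outside double quotes. An escaped quote ("") inside a quoted
// field toggles the state twice, so it cancels out and needs no special case.
inline std::pair<std::size_t, std::size_t> findSegmentEnd(
    std::string_view data, std::size_t min_chunk_size, std::size_t min_rows)
{
    bool quotes = false;
    std::size_t rows = 0;
    for (std::size_t pos = 0; pos < data.size(); ++pos)
    {
        const char c = data[pos];
        if (c == '"')
        {
            quotes = !quotes;
        }
        else if (c == '\n' && !quotes)
        {
            ++rows;
            // Same shape as the engine's condition: bytes before this '\n' AND rows seen.
            if (pos >= min_chunk_size && rows >= min_rows)
                return {pos + 1, rows};
        }
    }
    // Not enough data to satisfy both limits: the segment takes everything left.
    return {data.size(), rows};
}
```

For the input `1,"x\ny"\n2,z\n3,w\n`, the newline inside the quoted field is skipped, so `findSegmentEnd(data, 0, 1)` cuts after the first row at offset 8. Raising `min_chunk_size` to 10 moves the cut to the end of the second row. This is why `min_chunk_size` and `min_rows` together decide how large each parsed segment is.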

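```cpp
static std::pair<bool, size_t> fileSegmentationEngineCSVImpl(ReadBuffer & in, DB::Memory<> & memory, size_t min_chunk_size, size_t min_rows)
{
    char * pos = in.position();
    bool quotes = false;
    bool need_more_data = true;
    size_t number_of_rows = 0;

    while (loadAtPosition(in, memory, pos) && need_more_data)
    {
        /// ... (excerpt: the branches that track `quotes` and search for '"', '\r', '\n' are omitted) ...
        else if (*pos == '\n')
        {
            ++number_of_rows;
            // min_chunk_size and min_rows are the key conditions: the segment is cut at the
            // first row end once enough bytes AND enough rows have been accumulated
            if (memory.size() + static_cast<size_t>(pos - in.position()) >= min_chunk_size && number_of_rows >= min_rows)
                need_more_data = false;
            ++pos;
            // Treat "\n\r" as a single line ending
            if (loadAtPosition(in, memory, pos) && *pos == '\r')
                ++pos;
        }
    }

    saveUpToPosition(in, memory, pos);
    // {whether more data remains, number of rows in this segment}
    return {loadAtPosition(in, memory, pos), number_of_rows};
}
```

V Acceptance results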

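After the initial optimization the server was still a Debug build. The Buffer layers are heavily abstracted, so processing was very slow.

Estimated single-thread throughput: about 70 MB/s.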
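The per-segment trace lines that follow report the segment size in bytes and the elapsed time in milliseconds; the timestamps confirm the unit, e.g. 10:25:44.211 to 10:25:44.345 for "use time 134". Single-thread throughput is therefore just bytes divided by seconds. The line format is taken from those logs; `parseSegmentLine` and `throughputMiBps` are hypothetical helpers for this worked example.

```cpp
#include <cassert>
#include <optional>
#include <string>

struct SegmentTiming
{
    unsigned long long bytes = 0;  // "segment size" in the trace line
    double millis = 0.0;           // "use time", in milliseconds
};

// Extract "segment size <bytes> ... use time <ms>" from a ParallelParsingInputFormat trace line.
// Lines without "use time" (e.g. "Start Parser!") yield no value.
inline std::optional<SegmentTiming> parseSegmentLine(const std::string & line)
{
    const std::string size_key = "segment size ";
    const std::string time_key = "use time ";
    const std::size_t s = line.find(size_key);
    const std::size_t t = line.find(time_key);
    if (s == std::string::npos || t == std::string::npos)
        return std::nullopt;
    SegmentTiming timing;
    timing.bytes = std::stoull(line.substr(s + size_key.size()));  // stops at the first non-digit
    timing.millis = std::stod(line.substr(t + time_key.size()));
    return timing;
}

// Throughput in MiB/s (1 MiB = 1048576 bytes). Returns 0 if the time is too small to measure.
inline double throughputMiBps(const SegmentTiming & timing)
{
    if (timing.millis <= 0.0)
        return 0.0;
    return (static_cast<double>(timing.bytes) / 1048576.0) / (timing.millis / 1000.0);
}
```

Applied to the logs: in INFO mode, segment 8 (10485834 bytes in 134 ms) works out to roughly 74.6 MiB/s, in line with the ~70 MB/s estimate. In Debug mode, segment 33 (10485813 bytes in 141824 ms) is only about 0.07 MiB/s, roughly a thousand times slower for the same amount of data.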

Debug mode

2022.02.16 10:22:02.465780 [ 2903129 ] {8f97a908-1ba6-4e25-911b-dbd4ac947bf9} <Trace> ParallelParsingInputFormat: Read S3 object index 33, segment size 10485813

2022.02.16 10:22:02.467560 [ 2903129 ] {8f97a908-1ba6-4e25-911b-dbd4ac947bf9} <Trace> ParallelParsingInputFormat: Read S3 object index 33, segment size 10485813 ,time 1 ,Start Parser!

2022.02.16 10:24:00.756938 [ 2903129 ] {8f97a908-1ba6-4e25-911b-dbd4ac947bf9} <Trace> ParallelParsingInputFormat: chunk.getNumRows() 65505 , time 118291

2022.02.16 10:24:24.289329 [ 2903129 ] {8f97a908-1ba6-4e25-911b-dbd4ac947bf9} <Trace> ParallelParsingInputFormat: chunk.getNumRows() 26287 , time 141823

2022.02.16 10:24:24.289850 [ 2903129 ] {8f97a908-1ba6-4e25-911b-dbd4ac947bf9} <Trace> ParallelParsingInputFormat: Read S3 object index 33, segment size 10485813 ,time 141824 ,Parser Finish!

2022.02.16 10:24:24.290132 [ 2903129 ] {8f97a908-1ba6-4e25-911b-dbd4ac947bf9} <Trace> ParallelParsingInputFormat: Read S3 object index 33 ,segment size 10485813 use time 141824

2022.02.16 10:25:18.579319 [ 2903129 ] {8f97a908-1ba6-4e25-911b-dbd4ac947bf9} <Trace> ParallelParsingInputFormat: Read S3 object index 15, segment size 10485783

2022.02.16 10:25:18.580013 [ 2903129 ] {8f97a908-1ba6-4e25-911b-dbd4ac947bf9} <Trace> ParallelParsingInputFormat: Read S3 object index 15, segment size 10485783 ,time 0 ,Start Parser!

2022.02.16 10:25:56.587206 [ 2903129 ] {8f97a908-1ba6-4e25-911b-dbd4ac947bf9} <Trace> ParallelParsingInputFormat: chunk.getNumRows() 65505 , time 38007

2022.02.16 10:26:31.826019 [ 2903129 ] {8f97a908-1ba6-4e25-911b-dbd4ac947bf9} <Trace> ParallelParsingInputFormat: chunk.getNumRows() 26371 , time 73246

2022.02.16 10:26:31.826507 [ 2903129 ] {8f97a908-1ba6-4e25-911b-dbd4ac947bf9} <Trace> ParallelParsingInputFormat: Read S3 object index 15, segment size 10485783 ,time 73247 ,Parser Finish!

2022.02.16 10:26:31.826747 [ 2903129 ] {8f97a908-1ba6-4e25-911b-dbd4ac947bf9} <Trace> ParallelParsingInputFormat: Read S3 object index 15 ,segment size 10485783 use time 73247

INFO mode

2022.02.16 10:25:44.211472 [ 323178 ] {61cbaecf-335d-4deb-baae-51f14cb153e5} <Trace> ParallelParsingInputFormat: Read S3 object index 8, segment size 10485834

2022.02.16 10:25:44.211557 [ 323178 ] {61cbaecf-335d-4deb-baae-51f14cb153e5} <Trace> ParallelParsingInputFormat: Read S3 object index 8, segment size 10485834 ,time 0 ,Start Parser!

2022.02.16 10:25:44.303112 [ 323178 ] {61cbaecf-335d-4deb-baae-51f14cb153e5} <Trace> ParallelParsingInputFormat: chunk.getNumRows() 65505 , time 91

2022.02.16 10:25:44.345420 [ 323178 ] {61cbaecf-335d-4deb-baae-51f14cb153e5} <Trace> ParallelParsingInputFormat: chunk.getNumRows() 26292 , time 133

2022.02.16 10:25:44.345469 [ 323178 ] {61cbaecf-335d-4deb-baae-51f14cb153e5} <Trace> ParallelParsingInputFormat: Read S3 object index 8, segment size 10485834 ,time 134 ,Parser Finish!

2022.02.16 10:25:44.345490 [ 323178 ] {61cbaecf-335d-4deb-baae-51f14cb153e5} <Trace> ParallelParsingInputFormat: Read S3 object index 8 ,segment size 10485834 use time 134

2022.02.16 10:25:44.368619 [ 323178 ] {61cbaecf-335d-4deb-baae-51f14cb153e5} <Trace> ParallelParsingInputFormat: Read S3 object index 17, segment size 10485789

2022.02.16 10:25:44.368691 [ 323178 ] {61cbaecf-335d-4deb-baae-51f14cb153e5} <Trace> ParallelParsingInputFormat: Read S3 object index 17, segment size 10485789 ,time 0 ,Start Parser!

2022.02.16 10:25:44.457678 [ 323178 ] {61cbaecf-335d-4deb-baae-51f14cb153e5} <Trace> ParallelParsingInputFormat: chunk.getNumRows() 65505 , time 89

2022.02.16 10:25:44.491163 [ 323178 ] {61cbaecf-335d-4deb-baae-51f14cb153e5} <Trace> ParallelParsingInputFormat: chunk.getNumRows() 26322 , time 122

2022.02.16 10:25:44.491205 [ 323178 ] {61cbaecf-335d-4deb-baae-51f14cb153e5} <Trace> ParallelParsingInputFormat: Read S3 object index 17, segment size 10485789 ,time 122 ,Parser Finish!

2022.02.16 10:25:44.491227 [ 323178 ] {61cbaecf-335d-4deb-baae-51f14cb153e5} <Trace> ParallelParsingInputFormat: Read S3 object index 17 ,segment size 10485789 use time 122

2022.02.16 10:25:44.513499 [ 323178 ] {61cbaecf-335d-4deb-baae-51f14cb153e5} <Trace> ParallelParsingInputFormat: Read S3 object index 26, segment size 10485847

2022.02.16 10:25:44.513551 [ 323178 ] {61cbaecf-335d-4deb-baae-51f14cb153e5} <Trace> ParallelParsingInputFormat: Read S3 object index 26, segment size 10485847 ,time 0 ,Start Parser!

2022.02.16 10:25:44.595114 [ 323178 ] {61cbaecf-335d-4deb-baae-51f14cb153e5} <Trace> ParallelParsingInputFormat: chunk.getNumRows() 65505 , time 81

2022.02.16 10:25:44.628066 [ 323178 ] {61cbaecf-335d-4deb-baae-51f14cb153e5} <Trace> ParallelParsingInputFormat: chunk.getNumRows() 26318 , time 114

2022.02.16 10:25:44.628102 [ 323178 ] {61cbaecf-335d-4deb-baae-51f14cb153e5} <Trace> ParallelParsingInputFormat: Read S3 object index 26, segment size 10485847 ,time 114 ,Parser Finish!

2022.02.16 10:25:44.628122 [ 323178 ] {61cbaecf-335d-4deb-baae-51f14cb153e5} <Trace> ParallelParsingInputFormat: Read S3 object index 26 ,segment size 10485847 use time 114

VI Performance

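1 Official benchmark results: queries

2 Benchmark results after optimization: queries

3 Write optimization

4 Writes after optimization

VII Test data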

1 Schema

```sql
CREATE TABLE lineorder
(
    LO_ORDERKEY UInt32,
    LO_LINENUMBER UInt8,
    LO_CUSTKEY UInt32,
    LO_PARTKEY UInt32,
    LO_SUPPKEY UInt32,
    LO_ORDERDATE Date,
    LO_ORDERPRIORITY LowCardinality(String),
    LO_SHIPPRIORITY UInt8,
    LO_QUANTITY UInt8,
    LO_EXTENDEDPRICE UInt32,
    LO_ORDTOTALPRICE UInt32,
    LO_DISCOUNT UInt8,
    LO_REVENUE UInt32,
    LO_SUPPLYCOST UInt32,
    LO_TAX UInt8,
    LO_COMMITDATE Date,
    LO_SHIPMODE LowCardinality(String)
)
ENGINE = MergeTree PARTITION BY toYear(LO_ORDERDATE) ORDER BY (LO_ORDERDATE, LO_ORDERKEY);
```

2 Single-table test model

```sql
CREATE TABLE default.s3_1
(
    `LO_ORDERKEY` UInt32,
    `LO_LINENUMBER` UInt8,
    `LO_CUSTKEY` UInt32,
    `LO_PARTKEY` UInt32,
    `LO_SUPPKEY` UInt32,
    `LO_ORDERDATE` Date,
    `LO_ORDERPRIORITY` LowCardinality(String),
    `LO_SHIPPRIORITY` UInt8,
    `LO_QUANTITY` UInt8,
    `LO_EXTENDEDPRICE` UInt32,
    `LO_ORDTOTALPRICE` UInt32,
    `LO_DISCOUNT` UInt8,
    `LO_REVENUE` UInt32,
    `LO_SUPPLYCOST` UInt32,
    `LO_TAX` UInt8,
    `LO_COMMITDATE` Date,
    `LO_SHIPMODE` LowCardinality(String)
)
ENGINE = S3('http://xxx/insert01/s3_engine_1.csv', 'xxx', 'xxx', 'CSV')
```

3 Multi-table test model

```sql
CREATE TABLE default.s3_5
(
    `LO_ORDERKEY` UInt32,
    `LO_LINENUMBER` UInt8,
    `LO_CUSTKEY` UInt32,
    `LO_PARTKEY` UInt32,
    `LO_SUPPKEY` UInt32,
    `LO_ORDERDATE` Date,
    `LO_ORDERPRIORITY` LowCardinality(String),
    `LO_SHIPPRIORITY` UInt8,
    `LO_QUANTITY` UInt8,
    `LO_EXTENDEDPRICE` UInt32,
    `LO_ORDTOTALPRICE` UInt32,
    `LO_DISCOUNT` UInt8,
    `LO_REVENUE` UInt32,
    `LO_SUPPLYCOST` UInt32,
    `LO_TAX` UInt8,
    `LO_COMMITDATE` Date,
    `LO_SHIPMODE` LowCardinality(String)
)
ENGINE = S3('http://xxx/insert01/s3_engine_{1..5}.csv', 'xxx', 'xxx', 'CSV')
```
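The multi-table model points a single S3 table at five objects through the `{1..5}` range in the URL. The S3 engine substitutes every number from N to M for a `{N..M}` pattern. The following is a minimal sketch of what that expansion produces for one numeric range, using a hypothetical `expandNumericRange` helper. It is not ClickHouse's implementation and handles only a single `{N..M}`, no other wildcard forms.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Expand the first "{N..M}" in `pattern` into one string per number in [N, M].
// Patterns without a valid numeric range are returned unchanged; N > M yields nothing.
inline std::vector<std::string> expandNumericRange(const std::string & pattern)
{
    const std::size_t open = pattern.find('{');
    const std::size_t dots = pattern.find("..", open);
    const std::size_t close = pattern.find('}', open);
    if (open == std::string::npos || dots == std::string::npos || close == std::string::npos || dots > close)
        return {pattern};

    const std::string first = pattern.substr(open + 1, dots - open - 1);
    const std::string last = pattern.substr(dots + 2, close - dots - 2);
    auto is_number = [](const std::string & s)
    {
        return !s.empty() && s.find_first_not_of("0123456789") == std::string::npos;
    };
    if (!is_number(first) || !is_number(last))
        return {pattern};

    const long long from = std::stoll(first);
    const long long to = std::stoll(last);
    std::vector<std::string> keys;
    for (long long i = from; i <= to; ++i)
        keys.push_back(pattern.substr(0, open) + std::to_string(i) + pattern.substr(close + 1));
    return keys;
}
```

For `http://xxx/insert01/s3_engine_{1..5}.csv` this gives the five object URLs `s3_engine_1.csv` through `s3_engine_5.csv`. These are the independent objects that s3_5 can read, compared with the single object behind s3_1.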

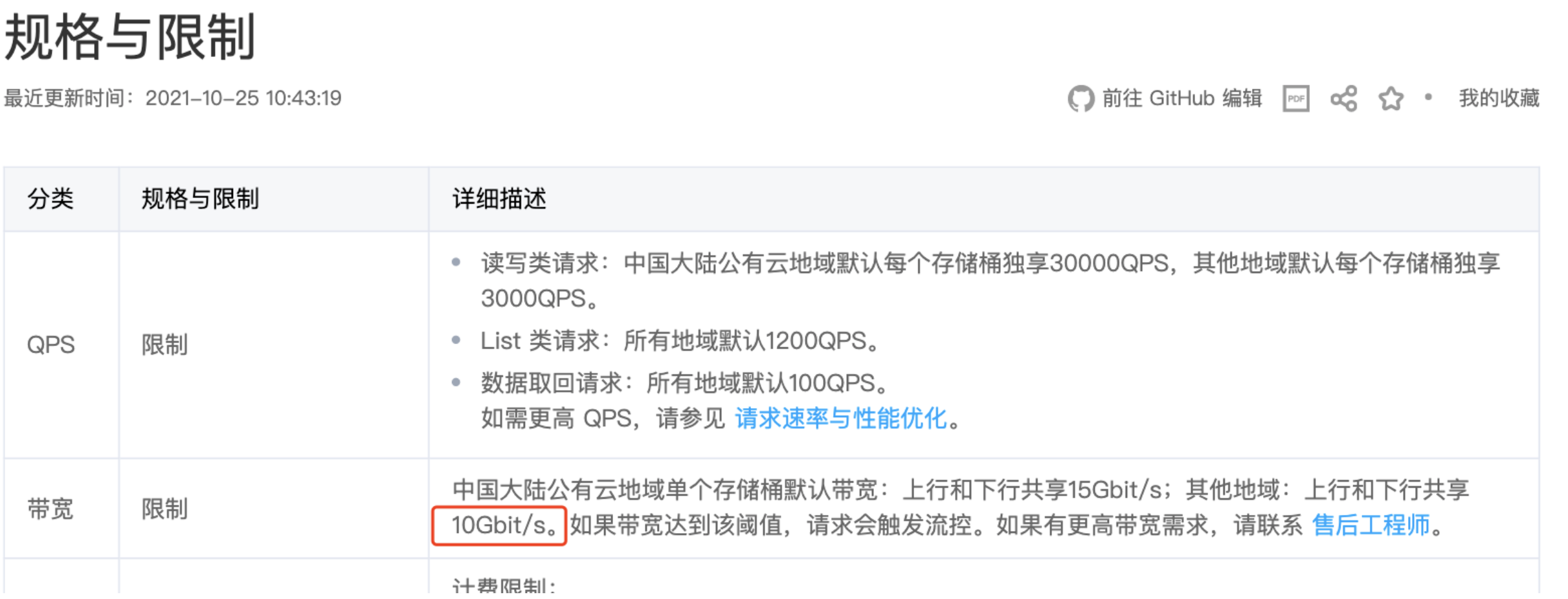

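4 Tencent Cloud COS specifications and limits

5 Network test results

- Left: network performance after optimization; it can essentially saturate the COS bandwidth.

- Right: network performance before optimization.

6 Network bandwidth tests at different data volumes

I hope this helps anyone learning ClickHouse!

The code is not suitable for public release. You are welcome to try Tencent Cloud ClickHouse. Thanks!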

Original article: https://cloud.tencent.com/developer/article/1953046
